AI Art – Quirky tools with a potential sting in their tail?

20/03/2023

Several tools have become available in the last couple of years, such as DALL-E, Stable Diffusion, Parti and MidJourney, that allow an image to be generated from a description. These tools use a huge amount of source image data combined with machine learning to generate an infinite amount of "original" artwork or imagery.

Over time these tools have continually improved, increasing the training data and processing power, to produce more and more impressive and realistic results.

DALL-E in action

In research we experimented with the DALL-E tool which was created by OpenAI. We deal with a large number of novel media and we thought it would be interesting to see whether we could generate an equivalent quality through one of these AI graphic tools. Attempting to replicate Harriet, our brand mascot, became a driving force!

Initially we started with Mini DALL-E (now called Craiyon), which has free access to generate images. We then applied for early access to DALL-E 2, which has since become available to the wider public. DALL-E 2 allows a few free requests per month, following that you need to pay.

We tried to replicate some of our marketing material relating to MHR's Harriet the Hamster. It isn’t what we were hoping for, but it gives you an idea of the quality you can get from this text prompt:

"A furry orange and white 3d hamster in the style of pixar that is smiling at the camera. It is wearing a jetpack with flames coming out of the exhaust."

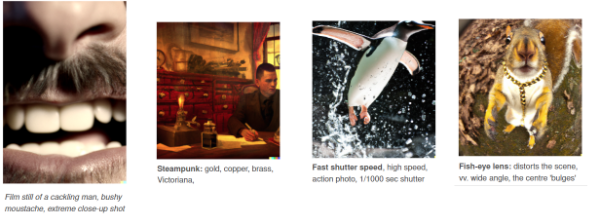

We found that, in general, the more you add to the description the better the image and sometimes even adding more technical photographic terms could help the quality of the final image. There are also certain genres and subject matters that work better than others, for example steampunk seems to be particularly well supported. Here are some examples taken from a DALL-E user guide to show the breadth of the potential descriptions.

Sometimes, the pictures can take many attempts to get a good result - the odds are the above images were the best out of dozens, if not hundreds, of attempts. Having said that, it does occasionally nail it, proving that getting a good description is extremely important!

The technology, while impressive, certainly isn't at a stage to replace an artist; the detail isn't there and perhaps worse still the replication isn't there. Even if you generated a perfect alternative to Harriet, you couldn't get it to then generate the same image in different scenarios - yet.

This highlights where the current strengths of the technology lie, not with replacing a graphic designer, but with giving them a tool for generating ideas and templates which they can then develop further. All the examples we tried have processed the image in the cloud, but already there are tools that can be run on a client machine (assuming it's powerful enough), which enable a more symbiotic relationship between the artist and the AI. This also underlies the fact that this is still a tool that requires mastering.

Midjourney

While writing the blog I did gain access to Midjourney, which was at the time in beta and tried similar experiments with it. As it's still fairly early access you currently interact with it through Discord, which can be a little clunky and feels more of a techy experience than DALL-E.

This was one of my first tries - as you can see, the result is incredibly impressive.

“Film still, gangster squirrel counting his money, low angle, shot from below, worm eye view - v 4”

While using Discord is a bit of a faff, you do have the advantage of seeing other people's requests and responses, many of which are amazing. It's also useful for picking up tips as to what to enter in your prompt for the best results.

Ethical and legal issues

Two main concerns have been raised with the image output from DALL-E and its competitors: who owns the responsibility for the image generated and who holds the copyright.

OpenAI has various content guidelines in place to prevent people from attempting to generate images containing sexual content, extreme violence, negative stereotypes, criminal offenses and other dubious output. In the early stages of its operation, after around three million images were generated, OpenAI reported that 0.05% of the generated images had potential guideline violations (around 450) and 30% of those resulted in accounts being blocked. While this number is very low, it does raise questions about who is responsible for the generated images - OpenAI or the users. Could an ambiguous or unintentionally worded description that generates an offensive image result in prosecution for the user or the owner? Some of the more advanced tools can take well known celebrity names and can place a recognisable representation of them in completely novel scenes. This has to raise the spectre of deep fakery as well as copyright infringement.

There is also the question of who owns the image from a commercial perspective. For example, if you generate an image that you want to include in some marketing material and a 3rd party wants to re-use that image, who owns it? Currently OpenAI claim the ownership, but legal experts believe it's not so straightforward and there will probably be legislative fall-out from this.

A second concern, flipping the perspective, is around the artwork that is utilised in the generation of the images. For example, you can ask DALL-E to generate any request in the style of any well-known painter and more often than not it will produce a decent effort. This is all fun if we're talking about a long dead artist such as Van Gogh or Picasso, but what about a current artist whose work will inevitably have been utilised in the training data to generate the image. Worst still for the thousands of graphic artists out there who put work into the public domain, expecting acknowledgment if anyone uses their work, they currently get nothing for something that could be heavily incorporating their work into a random image.

The future

This technology is improving rapidly and with so many competing companies (and in many cases, heavily funded companies) it's only a matter of time before the techniques become increasingly popular. Already text to video is being demoed which will open a whole new set of opportunities or a massive can of worms, depending on your point of view.

But there will be legal consequences. With the wealth of some of these companies it feels difficult to predict how these will be resolved, but the EU in particular holds a dim view of how these large organisations operate and it would be no surprise to see it weighed in on the issue. But for the time being, it's difficult not to be impressed by the quality of some of these images.